Mediterranean Diet and Stroke Prevention in Women

A 21-Year Study Strengthens the Case for Food as Medicine

The Mediterranean diet has long been considered one of the most evidence-backed eating patterns in modern medicine. It is consistently associated with lower risks of cardiovascular disease, type 2 diabetes, certain cancers, cognitive decline, and depression. Now, new long-term data add strong evidence that it may also significantly reduce both ischemic and hemorrhagic stroke risk in women.

A major 21-year study published in Neurology Open Access provides some of the strongest female-specific stroke prevention data to date.

Below is an evidence-based breakdown organized around the classic W questions: what, who, when, why, and how.

WHAT Is the Mediterranean Diet?

The Mediterranean diet is a plant-forward dietary pattern rooted in traditional eating habits of countries bordering the Mediterranean Sea.

Core Components (Scored in the Study)

Participants received one point each for:

High vegetable intake (excluding potatoes)

High fruit intake

Whole cereal grains

Legumes (lentils, chickpeas, kidney beans)

Fish

Regular olive oil use

Low meat intake

Low dairy intake

Mild-to-moderate alcohol intake (≤30 g/day)

Total score: 0–9

0–2 = Low adherence

6–9 = High adherence

This scoring system reflects decades of epidemiological research linking these foods to improved vascular health.
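The scoring rule above is simple enough to sketch in code. The Python snippet below is an illustration only, not the study's actual scoring procedure. The class and function names are hypothetical, each component is reduced to a yes/no flag because the cutoffs for "high" or "low" intake are not given here, and the "intermediate" label for scores of 3–5 is an assumption.

```python
from dataclasses import dataclass, fields


@dataclass
class DietProfile:
    """Hypothetical yes/no flags for the nine scored components."""
    high_vegetables: bool      # excluding potatoes
    high_fruit: bool
    whole_grains: bool
    legumes: bool
    fish: bool
    regular_olive_oil: bool
    low_meat: bool
    low_dairy: bool
    moderate_alcohol: bool     # mild-to-moderate intake


def adherence_score(profile: DietProfile) -> int:
    """One point per component that is met, for a total of 0-9."""
    return sum(bool(getattr(profile, f.name)) for f in fields(profile))


def adherence_category(score: int) -> str:
    """Map a 0-9 score to an adherence band."""
    if not 0 <= score <= 9:
        raise ValueError("score must be between 0 and 9")
    if score <= 2:
        return "low"
    if score >= 6:
        return "high"
    return "intermediate"  # assumed label; the text names only low and high


# Example: a made-up profile meeting seven of the nine components.
example = DietProfile(
    high_vegetables=True, high_fruit=True, whole_grains=True,
    legumes=True, fish=True, regular_olive_oil=True,
    low_meat=True, low_dairy=False, moderate_alcohol=False,
)
example_score = adherence_score(example)
print(example_score, adherence_category(example_score))  # 7 high
```

Keeping the components as plain flags makes the sketch independent of any particular intake threshold, which would have to come from the study itself.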

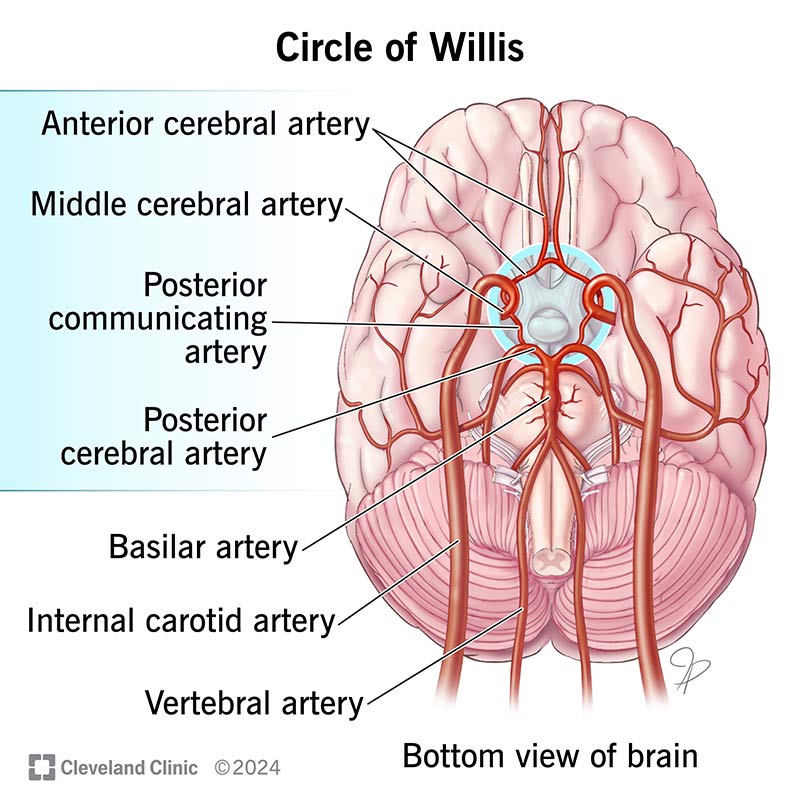

WHAT Is a Stroke?

There are two major types:

1️⃣ Ischemic Stroke (≈85% of all strokes)

Cause: Blood flow to part of the brain is blocked by a clot or narrowed artery.

Major risk factors:

Age

Diabetes

High cholesterol

Obesity

Hypertension

Atrial fibrillation

Sleep apnea

Poor diet

2️⃣ Hemorrhagic Stroke

Cause: A blood vessel in the brain ruptures, causing bleeding and tissue damage.

Major risk factors:

High blood pressure

Smoking

Heavy alcohol use

High cholesterol

Obesity

Historically, diet has been strongly linked to ischemic stroke risk—but data on hemorrhagic stroke has been limited. This study helps fill that gap.

WHO Was Studied?

Researchers from the U.S. and Greece analyzed data from 105,614 women enrolled in the California Teachers Study.

Average age at baseline: 53

No prior stroke

Follow-up period: 21 years

Total strokes documented: 4,083

3,358 ischemic

725 hemorrhagic

This is one of the largest and longest female-specific dietary stroke studies ever conducted.
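As a quick sanity check on the numbers above, the short Python sketch below confirms that the subtype counts add up to the reported total and works out each subtype's share. The variable names are illustrative, and the final figure is only a crude proportion of participants with a documented stroke over follow-up, not an incidence rate.

```python
participants = 105_614
total_strokes = 4_083
ischemic = 3_358
hemorrhagic = 725

# The two subtypes should account for every documented stroke.
assert ischemic + hemorrhagic == total_strokes

ischemic_share = ischemic / total_strokes
hemorrhagic_share = hemorrhagic / total_strokes
crude_proportion = total_strokes / participants

print(f"Ischemic share:    {ischemic_share:.1%}")     # 82.2%
print(f"Hemorrhagic share: {hemorrhagic_share:.1%}")  # 17.8%
print(f"Women with a documented stroke: {crude_proportion:.1%}")  # 3.9%
```

The ischemic share of roughly 82% in this cohort is close to the ≈85% figure cited earlier for strokes in general.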

WHEN Was This Research Published?

The study was published on February 4, 2025, in Neurology Open Access, adding fresh longitudinal evidence to decades of Mediterranean diet research.

WHY Does the Mediterranean Diet Reduce Stroke Risk?

The protective mechanisms are biologically plausible and well-documented.

1️⃣ Blood Pressure Regulation

Olive oil, potassium-rich produce, and a lower intake of processed foods improve endothelial function and help lower blood pressure. Hypertension is the leading modifiable risk factor for stroke.

2️⃣ Anti-Inflammatory Effects

Chronic inflammation contributes to arterial damage and clot formation.

Mediterranean foods are rich in:

Polyphenols

Omega-3 fatty acids

Fiber

Antioxidants

These reduce inflammatory biomarkers like CRP.

3️⃣ Improved Lipid Profiles

Lower LDL cholesterol and higher HDL improve arterial health.

4️⃣ Better Insulin Sensitivity

Better insulin sensitivity lowers diabetes risk, which in turn means less vascular damage.

5️⃣ Improved Vascular Integrity

Emerging research suggests polyphenols and omega-3s may strengthen blood vessel walls—possibly explaining the reduced hemorrhagic stroke risk.

HOW Much Risk Reduction Was Observed?

After adjusting for:

Age

Race/ethnicity

Smoking

BMI

Physical activity

Calorie intake

Hypertension

Diabetes

High cholesterol

Atrial fibrillation

Compared with women in the low-adherence group, women with high Mediterranean diet adherence had:

18% lower overall stroke risk

16% lower ischemic stroke risk

25% lower hemorrhagic stroke risk

The hemorrhagic reduction is particularly striking because this subtype has historically been harder to modify through lifestyle.
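To make these percentages concrete, the sketch below converts each "X% lower" figure into a risk ratio, assuming the figures are relative reductions against a comparison group. The function names are hypothetical, and the baseline risk in the last step is a made-up number used only to show the difference between a relative and an absolute reduction. It is not a result from the study.

```python
def risk_ratio(percent_lower: float) -> float:
    """Convert an 'X% lower risk' figure into a ratio (e.g. 18 -> 0.82)."""
    if not 0 <= percent_lower <= 100:
        raise ValueError("percent_lower must be between 0 and 100")
    return round(1 - percent_lower / 100, 2)


def risk_after_reduction(baseline_risk: float, percent_lower: float) -> float:
    """Apply a relative reduction to a baseline risk (both as fractions)."""
    return baseline_risk * (1 - percent_lower / 100)


# Relative reductions as reported in the text.
reported = {"overall": 18, "ischemic": 16, "hemorrhagic": 25}
ratios = {subtype: risk_ratio(pct) for subtype, pct in reported.items()}
print(ratios)  # {'overall': 0.82, 'ischemic': 0.84, 'hemorrhagic': 0.75}

# HYPOTHETICAL baseline risk of 4%, chosen only for illustration.
HYPOTHETICAL_BASELINE = 0.04
reduced = risk_after_reduction(HYPOTHETICAL_BASELINE, reported["overall"])
print(round(reduced, 4))  # 0.0328
```

Under that illustrative baseline, an 18% relative reduction would move the risk from 4% to about 3.3%, an absolute difference of less than one percentage point. A relative figure can sound larger than the absolute change it represents.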

WHY Is This Especially Important for Women?

Women face unique stroke dynamics:

Stroke risk increases sharply after menopause.

Women live longer, increasing lifetime exposure.

According to the Heart and Stroke Foundation of Canada:

45% more women than men die from stroke.

More women live with long-term stroke disability.

Hormonal changes post-menopause affect:

Vascular stiffness

Lipid metabolism

Blood pressure regulation

Dietary intervention becomes even more critical in midlife and beyond.

STRENGTHS of the Study

Large sample size

21-year follow-up

Detailed risk factor adjustment

Subtype-specific stroke analysis

Among the first major studies to show reduced hemorrhagic stroke risk in women

LIMITATIONS

Observational (cannot prove causation)

Self-reported dietary data

Diet assessed only at baseline

Participants were predominantly educators (may limit generalizability)

However, the findings align with previous large trials such as PREDIMED, which demonstrated cardiovascular risk reduction with a Mediterranean diet intervention.

WHAT Should Women Actually Eat?

Evidence-based daily and weekly targets:

✔️ Vegetables at every meal

✔️ Fruit daily

✔️ Legumes 3–4x/week

✔️ Fish 2–3x/week

✔️ Extra-virgin olive oil as primary fat

✔️ Whole grains

✔️ Limited red meat

✔️ Minimal processed food

Alcohol, if consumed, should remain moderate (≤1 drink/day for women).

The Bottom Line

This 21-year study adds robust, subtype-specific evidence that strong adherence to the Mediterranean diet is associated with meaningful reductions in both ischemic and hemorrhagic stroke risk in women.

While it does not prove causation, the biological mechanisms, consistency across prior research, and long follow-up duration make the evidence compelling.

Stroke remains one of the leading causes of death and long-term disability in women. Unlike genetics or aging, diet is modifiable.

The Mediterranean diet is not a trend.

It is one of the most extensively studied, reproducible, and physiologically supported dietary patterns in preventive medicine.

Food is not just fuel.

It is vascular protection.

yours truly,

Adaptation-Guide